Outsourcing is a stepping stone on the way to cloud computing.

I would even say that companies with outsourcing experience can adopt cloud much more easily than others. They are already used to dealing with a service provider, and they have learned how to both trust and challenge it to get the desired services. But certain criteria must be met to ensure that both parties get the most out of the outsourcing relationship.

According to a new study from IBM’s Center for Applied Insights on the adoption of cloud within strategic outsourcing environments, the key success factors for a cloud outsourcing project are:

• Better due diligence

• Greater attention to security

• Incumbent providers

• Helping the business adjust

• Planning integration carefully

I fully agree with all of these points, but I found myself thinking back 15 years, to when the outsourcing business was on the rise. These success factors actually do not differ much from those of the early days. A company that has already outsourced parts of its information technology (IT) to an external provider will have had to address these topics before, perhaps in a slightly different manner, but still thoroughly enough to understand their importance.

Let’s briefly discuss these five key topics in more detail.

Due diligence

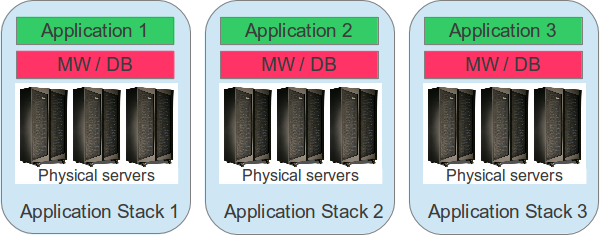

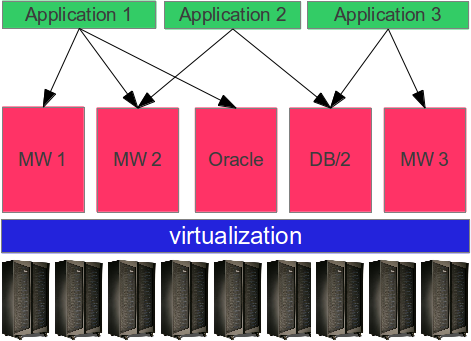

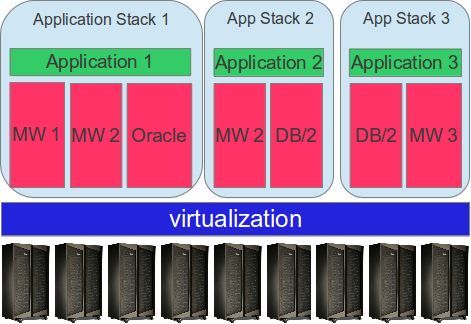

A common motivation for outsourcing is an environment that has grown historically and is expensive to run. Outsourcing providers have experience in analyzing an existing environment and transforming it into a more standardized setup that can be operated at reasonable cost. Proper due diligence is key to understanding the transformation effort and its effects. For cloud computing, the story is basically identical; the only difference is the target environment, which might be even more standardized. But again, knowing which systems are in scope for the transformation and what their specific requirements are is essential for success.

Security

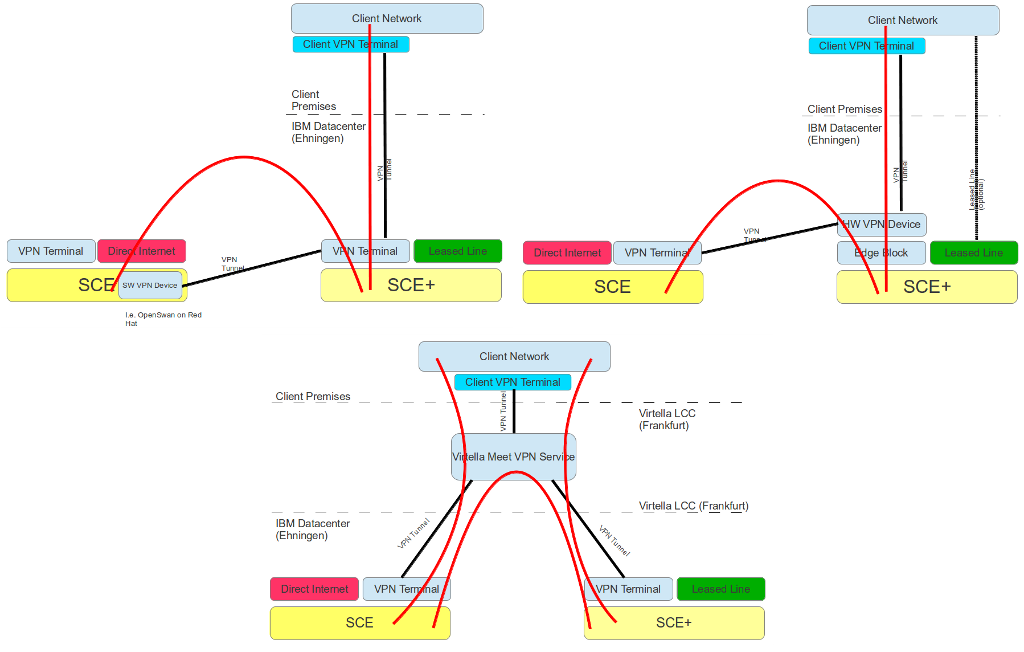

When a client introduces outsourcing for the first time in its history, the security department needs to be involved early, and its consent and support are required. In most companies, especially in sensitive industries like finance or health care, security policies prevent systems from being managed by a third-party service provider. Even if that is not obvious at first glance, the devil is often in the details.

I remember an insurance company that restricted traffic to the outsourcing provider’s shared systems in such a way that proper management using a cost-effective delivery model was not possible. Those security policies had to be adapted to treat the service provider as a trusted second party rather than an untrusted third party. Cloud computing does introduce further new aspects, but in general it is just another step in the same direction.

Incumbent providers

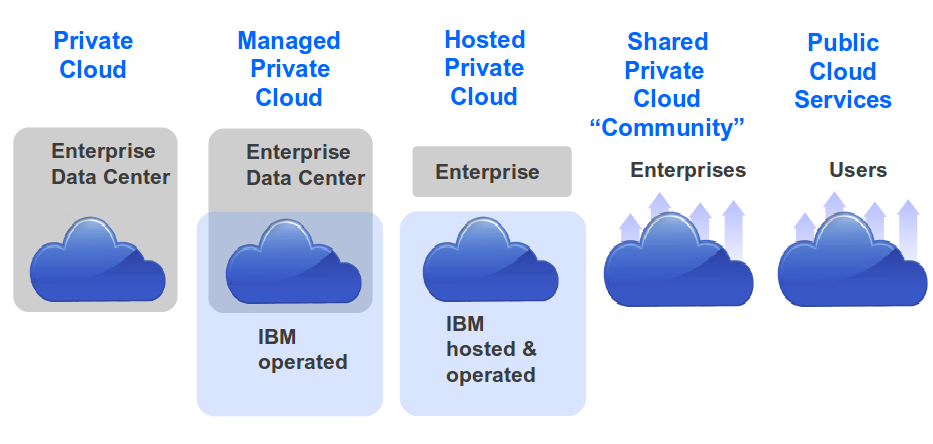

If your current outsourcing provider has proven that it is able to run your environment to the standards you expect, you can reasonably trust it to operate its cloud offering in the same manner. Look at the big outsourcing providers in the industry, such as IBM: they all have mature delivery models, developed over years of experience, and they use these same delivery models for their cloud offerings.

Business adjustment

In an outsourced environment, the business is already used to dealing with a service provider for its requests. Cloud computing introduces new aspects, such as self-service capabilities or new restrictions resulting from a more standardized environment. The business needs to be prepared, but the step is far smaller than it would be for a company whose IT has not yet been outsourced.

Integration planning

Again, this is a task that already had to be done during the outsourcing transformation. Outsourcing providers have shared systems and delivery teams that need to be integrated. Cloud computing may go one step further and place the workloads themselves on shared systems, but that is not really a new topic at all.

Outsourced clients are already well prepared for the step into cloud. Of course, there will be a hurdle or two to clear, but compared to firms that still run all of their IT in-house, the journey is just another step in the same direction.

What are your thoughts about this topic? Catch me on Twitter via @emarcusnet for an ongoing discussion!