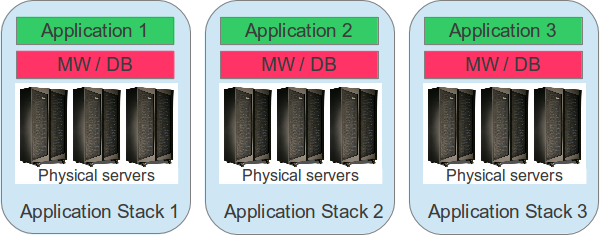

Once upon a time, applications ran on physical servers. These physical server infrastructures were sized to accommodate the application software as well as the required middleware and database components. The sizing was mainly based on peak load expectations because hardware could be upgraded only to a limited extent. This led to a very simple application landscape topology. Every application had its own set of physical server systems. If an application had to be replaced or upgraded, only those server systems were affected.

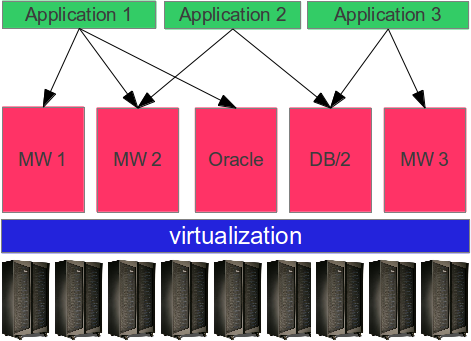

As the number of applications grew, the number of server systems reached a level that was hard to manage and maintain. Consolidation was the trend of that time. As virtualization technologies matured, capacity upgrades became as easy as moving a slider bar. After the consolidation of the physical layer in the late 90s and early 2000s, the middleware and database layers were consolidated as well. Starting around 2005, we saw database hotels and consolidated middleware stacks that provided a standardized layer of capabilities to the applications.

Although this setup helped streamline middleware and database management and standardize the software landscape, it introduced a number of problems:

The whole environment became more complex. Whenever a middleware stack was changed (due to a patch or even a version upgrade), multiple applications were affected and had to be retested. Maintenance windows needed to be coordinated with all application owners, and every unplanned downtime hit a larger number of applications.
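To make this coupling concrete, here is a minimal sketch that counts which applications need retesting when one middleware stack is patched. The application and stack names (`billing`, `shared-mw`, and so on) and the function names are hypothetical and only serve as an illustration; a real landscape would be read from a CMDB or similar inventory.

```python
from collections import defaultdict


def build_dependents(app_to_stack):
    """Invert an application -> stack mapping into stack -> set of applications."""
    dependents = defaultdict(set)
    for app, stack in app_to_stack.items():
        dependents[stack].add(app)
    return dependents


def affected_apps(app_to_stack, changed_stack):
    """Return the applications that must be retested when `changed_stack` changes."""
    return sorted(build_dependents(app_to_stack).get(changed_stack, set()))


# Hypothetical landscapes: one shared stack versus one stack per application.
consolidated = {"billing": "shared-mw", "crm": "shared-mw", "portal": "shared-mw"}
deconsolidated = {"billing": "mw-billing", "crm": "mw-crm", "portal": "mw-portal"}

print(affected_apps(consolidated, "shared-mw"))     # all three applications
print(affected_apps(deconsolidated, "mw-billing"))  # only billing
```

In the consolidated landscape, a single patch touches every application that shares the stack, which is exactly why maintenance windows have to be negotiated with all owners.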

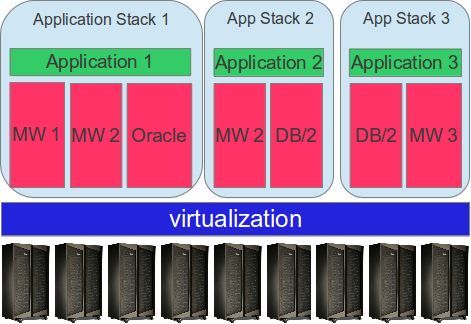

Modern cloud computing is reversing this trend again. Because provisioning and management of standard middleware and database services can be highly automated, deploying and managing a larger number of smaller server images takes less effort than it did in the early days. By de-consolidating these middleware and database blocks, we regain flexibility and end up with a far less complex environment.

There is another positive side effect of this approach: when application workloads are bundled together with their middleware and database components, they can more easily be moved to a fit-for-purpose infrastructure. Especially when some workloads are to be migrated into the cloud while others stay on a more traditional IT infrastructure, the new model helps to move these isolated workloads without affecting others.
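As a rough illustration of this isolation argument, the following sketch checks whether a workload can be moved on its own, that is, whether any of its components are also used by another application. The landscape data and the function names are assumptions made up for this example.

```python
def shared_components(app, app_components):
    """Return the components of `app` that at least one other application also uses."""
    used_by_others = set()
    for name, components in app_components.items():
        if name != app:
            used_by_others |= set(components)
    return sorted(set(app_components[app]) & used_by_others)


def can_move_alone(app, app_components):
    """A workload can move independently if it shares no component with other apps."""
    return not shared_components(app, app_components)


# Hypothetical landscape: billing is fully isolated, crm and portal share middleware.
landscape = {
    "billing": {"mw-billing", "db-billing"},
    "crm": {"mw-shared", "db-crm"},
    "portal": {"mw-shared", "db-portal"},
}

print(can_move_alone("billing", landscape))  # True
print(shared_components("crm", landscape))   # ['mw-shared']
```

Here `billing` could be migrated to the cloud without touching anything else, while moving `crm` would first require a decision about the shared middleware it has in common with `portal`.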

Summary

I am not saying that deconsolidation of database and middleware blocks is the holy grail of middleware topology architecture, but in a cloud environment it can help to get rid of complex integration problems without introducing new ones.