Anyone remember Super Audio CD (SACD)? Or DVD-Audio (DVD-A)? Those formats once battled over which would succeed the Audio CD as the primary medium for audio content. However, once MP3 was invented and became widely distributed, disc formats became obsolete.

When Blu-ray Disc and HD DVD fought a similar battle over which would be the next primary video medium, experts were already talking about a war in which nobody had anything to win. It was predicted that the Internet would become that primary medium and that physical media such as discs or tapes would no longer matter. Well, Blu-ray Discs did gain some market share, but only temporarily, as we can see looking back today. Download portals, IPTV, and video-on-demand offerings are slowly arriving and will surely get their piece of the cake.

LibreOffice 3.5.1 came out recently, and Apache OpenOffice, with the help of IBM, will release a new version (4.0) at approximately the end of the year. These two alternative office suites are mounting another attack on Microsoft’s dominance of the office software sector. But is this really a battle worth fighting?

First of all, this battle is almost impossible to win. Not only does Microsoft’s dominant market share amount to a de facto industry standard, but office documents are also mainly based on Microsoft’s proprietary document formats, which are often undisclosed and therefore hard for non-Microsoft applications to interpret correctly. I think that any success of alternative office suites rises and falls with their ability to import and export Microsoft formats properly.

But is this really the battlefield of the future? I don’t think so.

The actual battlefield for the future of software is in the cloud!

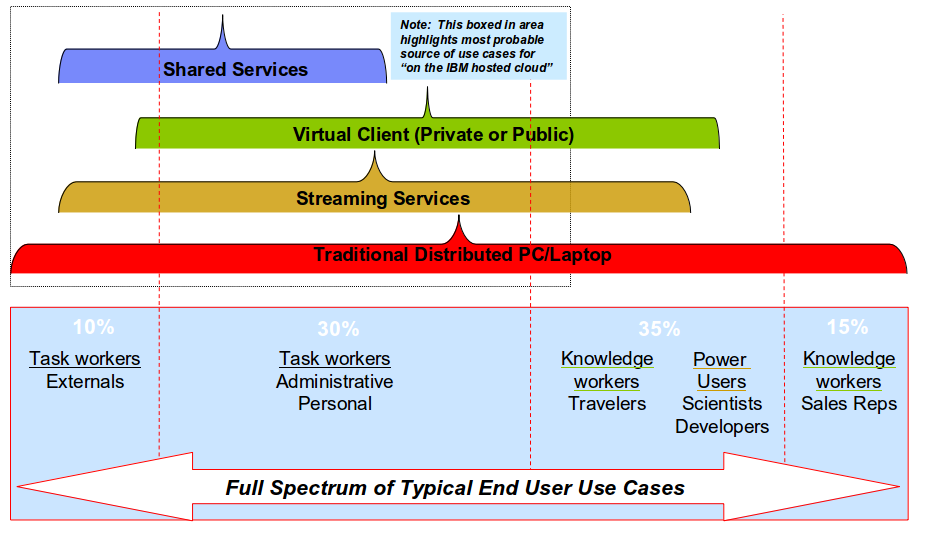

As Andreas Groth (@andreasgroth) and I mentioned in several earlier blog posts, the final goal of software evolution is to be web-based. There are several reasons for that: Web-based applications are easy to access (from any device), they are cheap to maintain, and they support our new requirements in terms of collaboration and content sharing more easily than any locally installed app does.

In regards to office software, all vendors had to start their development almost from zero again, which makes this race so interesting. Whether we look at Microsoft’s Office 365, IBM Docs (formerly known as LotusLive Symphony), or Google Docs, they all have in common that they were more or less developed from scratch. But besides the big three, there is a lot of momentum in this area toward making applications accessible from a simple browser. Examples include VMware AppBlast, Guacamole, and LibreOffice, which all use technology based on HTML5.

But what will be the criteria to succeed in the cloud?

There is no doubt that any office software needs to fulfil the productivity basics. I don’t think that cloud-based software must implement every fancy feature of Office 2010, but it must enable the user to fulfil day-to-day tasks, including the capability to import and export office documents, display them properly, and run macros.

In terms of collaboration, cloud-based software needs to provide added value over any desktop-based application. It should be easy to share and exchange documents with coworkers.

But the most important factor will be its integration capabilities. Desktop and office workloads will not move into the cloud overnight. There will be a period in which the use of cloud-based applications starts to grow while the majority of people are still using locally installed applications. Being well integrated, both with locally installed software and with server-based collaboration tools, will be the key factor for success. This is why I see Microsoft in a far better position than Google, although Google Docs has been around for quite some time and has started to become interesting, feature-wise.

IBM seems to be on the right track. Its integration of IBM Docs into IBM Connections and IBM SmartCloud for Social Business (formerly known as LotusLive), which can be tested in the IBM Greenhouse, looks very promising.

Summary

The new battlefield will be in the cloud, and although Microsoft has done its homework, the productivity software market is changing. There are more significant solutions and vendors available than in previous years. If they play their cards right and provide good integration together with an attractive license model and collaboration features, they could get their share of (Microsoft’s) cake.