Discussing hybrid cloud is an almost never-ending story; there are so many different aspects to explore. In this post, I would like to focus on workloads, their placement and their interconnection.

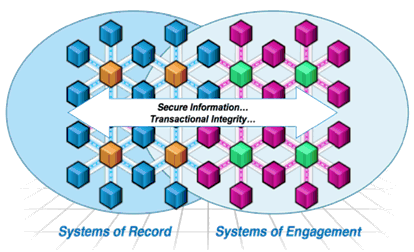

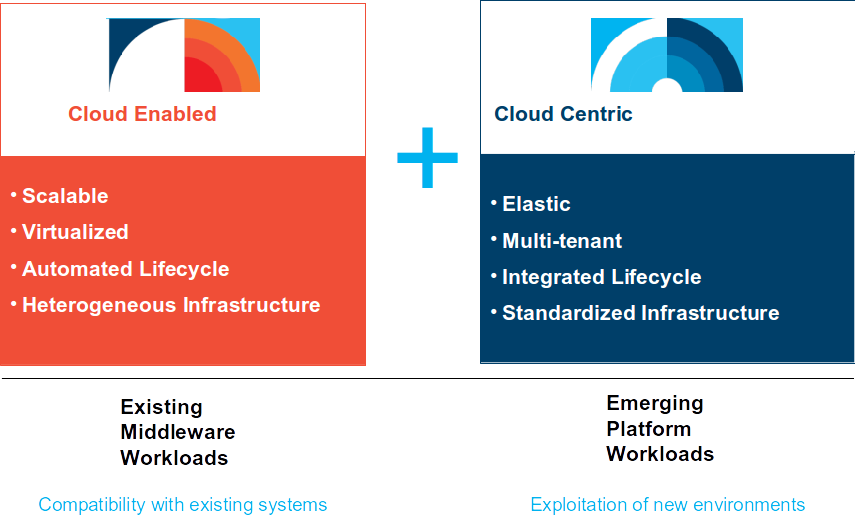

In earlier posts about workloads and their proper placement on different clouds, I introduced the terms cloud-enabled and cloud-native. While these definitions are still valid, in a hybrid cloud context they evolve into the paradigms of systems of record and systems of engagement.

The main difference from the cloud-enabled / cloud-native approach is that we are no longer talking about isolated workloads that are better placed here or there, but about integrated workload components spread across the infrastructures that a hybrid cloud connects.

Let's take a closer look at this new paradigm:

Systems of record fit well on cloud-enabled infrastructures. These workloads have specific requirements for security, performance and infrastructure redundancy. A relational database holding sensitive data is a good example of a workload component that is referred to as a system of record.

Systems of engagement have requirements that cloud-native infrastructures support well: flexibility, ease of deployment, elasticity and more. A web server farm is a good example here.

So, what is the thrilling news?

Because hybrid cloud environments are now much more tightly integrated than they were a year ago, there are completely new possibilities for splitting workloads and distributing them across environments.

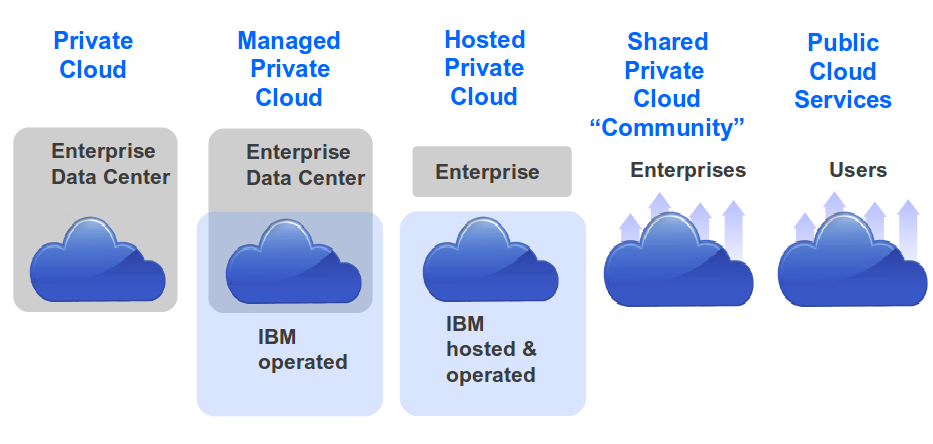

For example, if we consider a web shop application, the presentation layer can be considered a system of engagement, whereas the data layer is more likely a system of record. In a hybrid cloud, the web server farm of the presentation layer can be placed on a cloud-native environment like IBM SoftLayer, while the core database cluster, which holds the customers' credit card information, might be better placed on a PCI-compliant infrastructure like a private cloud or IBM Cloud Managed Services.
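To make the placement decision for the web shop a bit more concrete, here is a minimal Python sketch. Everything in it is an assumption made for illustration: the `Component` fields, the `classify` rule (sensitive data always wins, and anything that is neither sensitive nor elastic defaults to the more conservative side) and the target names in `PLACEMENT` are hypothetical and not part of any IBM API or tool.

```python
from dataclasses import dataclass
from enum import Enum


class SystemType(Enum):
    RECORD = "system of record"
    ENGAGEMENT = "system of engagement"


@dataclass(frozen=True)
class Component:
    name: str
    holds_sensitive_data: bool  # e.g. credit card information
    needs_elasticity: bool      # e.g. a web server farm that scales with traffic


def classify(component: Component) -> SystemType:
    """Assumed rule of thumb, not an official definition."""
    # Sensitive data outweighs everything else in this sketch.
    if component.holds_sensitive_data:
        return SystemType.RECORD
    if component.needs_elasticity:
        return SystemType.ENGAGEMENT
    # Neither sensitive nor elastic: default to the conservative choice.
    return SystemType.RECORD


# Hypothetical target environments, one per system type.
PLACEMENT = {
    SystemType.RECORD: "private cloud (PCI-compliant)",
    SystemType.ENGAGEMENT: "public cloud (cloud-native)",
}


def place(components):
    """Map each component name to its target environment."""
    return {c.name: PLACEMENT[classify(c)] for c in components}


web_shop = [
    Component("web server farm", holds_sensitive_data=False, needs_elasticity=True),
    Component("core database cluster", holds_sensitive_data=True, needs_elasticity=False),
]

for name, target in place(web_shop).items():
    print(f"{name} -> {target}")
```

In a real environment the decision would of course depend on many more criteria, such as performance, redundancy and compliance scope, but the sketch shows the idea of deciding placement per component rather than per workload.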

Another example could be an SAP system that provides web access capabilities. Again, the web-facing part could be on a public cloud, but the main SAP application is better placed on a suitable infrastructure like IBM Cloud Managed Services for SAP Applications or even a traditional IT environment.

As mentioned above, tight integration is key to success in hybrid cloud scenarios. One crucial integration aspect is networking. By interconnecting its strategic cloud data centers with the SoftLayer private network, IBM provides a worldwide high-speed network backbone for all its cloud data centers, so that components on different cloud offerings can communicate with each other properly. Other aspects are orchestration and governance, which I covered in my other post.

The combination of systems of record and systems of engagement brings hybrid cloud to the next level of its evolution. By using the best of both worlds in a single workload and placing each component on the best-fitting infrastructure, hybrid cloud computing becomes even more powerful. The prerequisite is tight integration, especially in the areas of networking, orchestration and governance.

Don’t hesitate to continue the discussion with me on Twitter via @emarcusnet!